Five teams’ projects

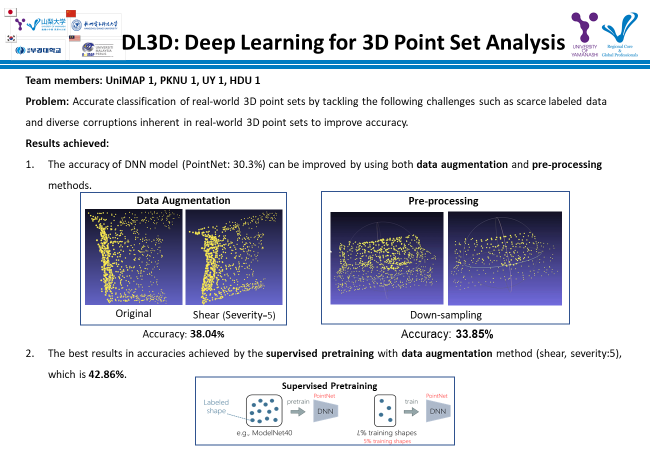

Team A: DL3D/Deep Learning for 3D Point Set Analysis

Team members: UniMAP 1, PKNU 1, UY 1, HDU 1

Research problem:

→Accurately classifying real-world 3D point sets by tackling challenges such as scarce labeled data and the diverse corruptions inherent in real-world 3D point sets.

Results achieved:

1. The accuracy of the DNN model (PointNet: 30.3%) can be improved by combining data augmentation and pre-processing methods.

2. The best accuracy, 42.86%, was achieved by supervised pretraining with data augmentation (shear, severity: 5).
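The summary does not say how the shear augmentation or its severity levels were parameterized. As a minimal sketch, assuming a shear parallel to the xy-plane (x' = x + a·z, y' = y + b·z) and a hypothetical linear mapping in which severity 1 to 5 bounds each shear factor to ±0.05 × severity, one augmentation step could look like this. The function name `shear_points` is illustrative only.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float, float]


def shear_points(points: List[Point], severity: int, rng: random.Random) -> List[Point]:
    """Apply one random shear, parallel to the xy-plane, to a 3D point set.

    Hypothetical severity mapping (an assumption, not the team's setting):
    each shear factor is drawn from [-0.05 * severity, 0.05 * severity].
    """
    if not 1 <= severity <= 5:
        raise ValueError("severity must be an integer in 1..5")
    limit = 0.05 * severity
    a, b = (rng.uniform(-limit, limit) for _ in range(2))
    # Matrix [[1, 0, a], [0, 1, b], [0, 0, 1]]: z is unchanged, x and y slide with z.
    return [(x + a * z, y + b * z, z) for x, y, z in points]


if __name__ == "__main__":
    rng = random.Random(0)
    cloud = [(0.0, 0.0, 1.0), (1.0, 2.0, 0.0), (0.5, -0.5, 2.0)]
    print(shear_points(cloud, severity=5, rng=rng))
```

During training, a fresh shear would typically be drawn for every sample so the model sees many distorted versions of each labeled point set, which is one way augmentation helps when labeled data is scarce.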

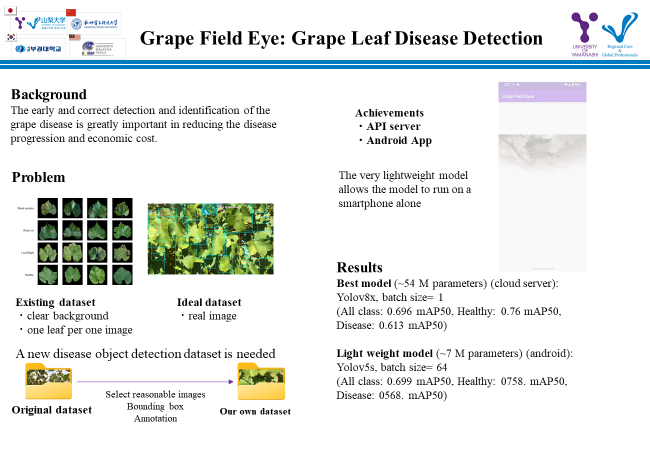

Team B: Grape Field Eye/ Grape Leaf Disease Detection

Team members: UniMAP 2, PKNU 1, UY 1, HDU 1

Research problem:

→Early and correct detection and identification of grape diseases is very important for slowing disease progression and reducing economic cost.

→A very lightweight model is needed so that it can run on a smartphone alone.

Results achieved:

1. Best model (~54 M parameters, cloud server): YOLOv8x, batch size = 1

(All classes: 0.696 mAP50, Healthy: 0.76 mAP50, Disease: 0.613 mAP50)

2. Lightweight model (~7 M parameters, Android): YOLOv5s, batch size = 64

(All classes: 0.699 mAP50, Healthy: 0.758 mAP50, Disease: 0.568 mAP50)
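mAP50 is mean average precision at an IoU threshold of 0.5: a predicted box counts as a true positive only if its intersection over union with a still-unmatched ground-truth box is at least 0.5, and the per-class average precision (AP) values are then averaged over classes. The sketch below is a simplified single-class, single-image version using all-point interpolation; real evaluators (such as the validation tools shipped with YOLOv5/YOLOv8) pool detections across the whole dataset and may use a different interpolation scheme. The names `iou` and `average_precision_50` are illustrative.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision_50(preds: List[Tuple[float, Box]], gts: List[Box]) -> float:
    """AP at IoU >= 0.5 for one class, using all-point interpolation.

    preds holds (confidence, box) pairs; gts holds ground-truth boxes.
    """
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    hits = []  # 1 for a true positive, 0 for a false positive, in confidence order
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_iou >= 0.5:
            matched[best_j] = True
            hits.append(1)
        else:
            hits.append(0)

    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Interpolate: precision at each rank becomes the max precision at any later rank.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum precision over each step in recall.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

Under this definition, the reported mAP50 values would be the mean of such AP values over the Healthy and Disease classes, computed over the full validation set.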

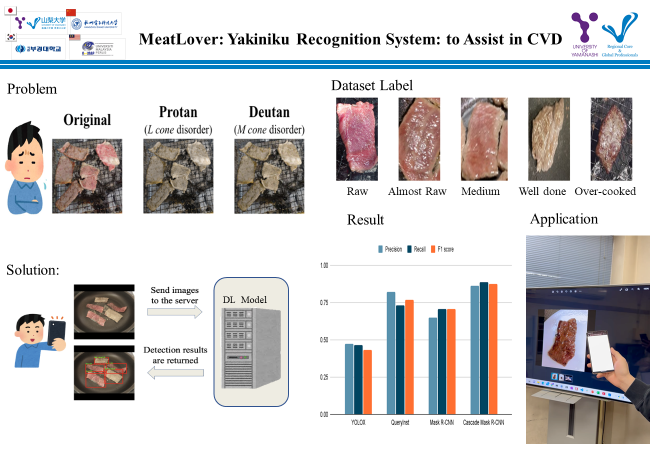

Team C: Meat Lover/Yakiniku Recognition System: to Assist in CVD

Team members: UniMAP 2, PKNU 1, UY 1, HDU 1

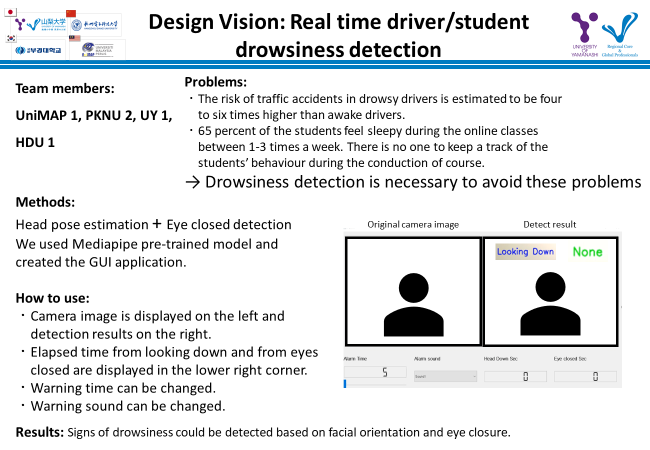

Team D: Design Vision/Real-Time Driver/Student Drowsiness Detection

Team members: UniMAP 1, PKNU 2, UY 1, HDU 1

Research problem:

→The risk of traffic accidents for drowsy drivers is estimated to be four to six times higher than for awake drivers.

→65 percent of students feel sleepy during online classes one to three times a week, and no one keeps track of students’ behavior while the course is being conducted.

→Drowsiness detection is necessary to avoid these problems.

Methods: Head pose estimation + eye-closure detection

We used a pre-trained MediaPipe model and built a GUI application.
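The summary does not state how eye closure is decided from the landmarks. One widely used technique, shown here as an assumption rather than the team's method, is the eye aspect ratio (EAR): the ratio of vertical to horizontal eye-opening distances computed from six eye landmarks, which drops toward zero when the eye closes. Which face-landmark indices feed the six points is not specified here, and the 0.2 threshold is a hypothetical starting value.

```python
import math
from typing import Sequence, Tuple

Point2D = Tuple[float, float]

EAR_CLOSED_THRESHOLD = 0.2  # hypothetical; would need tuning per camera and user


def eye_aspect_ratio(eye: Sequence[Point2D]) -> float:
    """EAR from six eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid, so (p2, p6) and (p3, p5) are vertical pairs.
    """
    if len(eye) != 6:
        raise ValueError("expected six landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def eyes_closed(left: Sequence[Point2D], right: Sequence[Point2D],
                threshold: float = EAR_CLOSED_THRESHOLD) -> bool:
    """Treat the eyes as closed when the mean EAR of both eyes is below threshold."""
    mean_ear = (eye_aspect_ratio(left) + eye_aspect_ratio(right)) / 2.0
    return mean_ear < threshold
```

Because EAR is a ratio, it is roughly insensitive to how far the face is from the camera, which makes a single threshold workable.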

How to use:

・The camera image is displayed on the left and the detection results on the right.

・The elapsed time since the user started looking down and since the eyes closed is displayed in the lower-right corner.

・The warning time can be changed.

・The warning sound can be changed.
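The elapsed-time display and adjustable warning time can be modeled as a timer that starts when a condition (eyes closed, or head looking down) begins, resets when it ends, and signals a warning once it has lasted longer than the configured time. This is a minimal sketch; the class name `ElapsedTimer` is hypothetical, and timestamps are passed in explicitly so the logic can be tested without a camera.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ElapsedTimer:
    """Measure how long a condition (eyes closed, or head looking down) has held.

    warning_seconds plays the role of the adjustable warning time;
    timestamps are given in seconds by the caller.
    """
    warning_seconds: float
    _since: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, active: bool, now: float) -> float:
        """Record the condition for this frame and return its elapsed time."""
        if not active:
            self._since = None  # condition broken: reset the timer
            return 0.0
        if self._since is None:
            self._since = now  # condition just started
        return now - self._since

    def should_warn(self, now: float) -> bool:
        """True once the condition has lasted at least warning_seconds."""
        return self._since is not None and now - self._since >= self.warning_seconds
```

A GUI loop would keep one timer per condition (for example `ElapsedTimer(2.0)` for eye closure and `ElapsedTimer(3.0)` for looking down, both hypothetical values), call `update` each frame with the detector result and a monotonic timestamp, show the returned elapsed time, and play the chosen warning sound when `should_warn` returns True.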

Results achieved:

1. Signs of drowsiness could be detected based on facial orientation and eye closure.

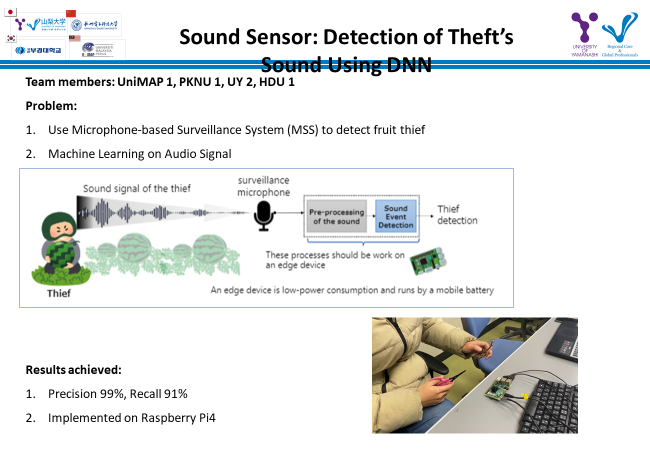

Team E: Sound Sensor/Detection of Theft Sounds Using DNN

Team members: UniMAP 1, PKNU 1, UY 2, HDU 1

Research problem:

→Use a Microphone-based Surveillance System (MSS) to detect fruit thieves.

→Machine learning on audio signals
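The summary does not specify which audio features the DNN consumed. As an illustrative assumption only, audio classifiers commonly begin by splitting the waveform into short overlapping frames; the sketch below computes a per-frame log energy, a simple feature that could feed a detector or gate quiet periods before running the model. The function name and the frame and hop sizes are hypothetical.

```python
import math
from typing import List, Sequence


def frame_log_energy(signal: Sequence[float], frame_len: int, hop: int) -> List[float]:
    """Split a mono signal into overlapping frames and return log energy per frame.

    Frames that would run past the end of the signal are dropped.
    """
    if frame_len <= 0 or hop <= 0:
        raise ValueError("frame_len and hop must be positive")
    energies = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = signal[start:start + frame_len]
        energy = sum(s * s for s in frame)
        energies.append(math.log(energy + 1e-10))  # epsilon avoids log(0) on silence
    return energies
```

At a 16 kHz sample rate, for example, `frame_len=400` and `hop=160` would give 25 ms frames every 10 ms, a common choice in speech and audio work.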

Results achieved:

1. Precision 99%, Recall 91%

2. Implemented on a Raspberry Pi 4
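The results report precision and recall but not F1. Taking the harmonic mean of the two reported values gives F1 = 2 × 0.99 × 0.91 / (0.99 + 0.91) ≈ 0.948; this figure is derived here, not reported by the team. The helper below (names illustrative) shows the computation from raw detection counts.

```python
from typing import Tuple


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)


print(round(f1_score(0.99, 0.91), 3))  # prints 0.948
```

For a theft detector, the gap between precision (99%) and recall (91%) means false alarms are rare, while roughly 9% of theft sounds would be missed.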